Mainframe Medical Database

Objective

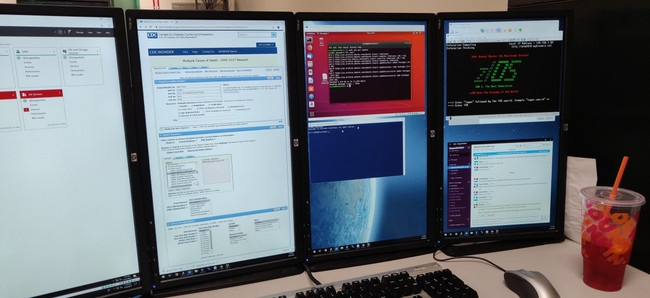

The purpose of this project is to build a medical database in a mainframe environment. To do this, we will gather readily available data from government websites. The data must be in .CSV format and will be transferred to the mainframe via FTP. In parallel, we will create a logical model of the database using ERwin data modeling software. We will then use DDL (Data Definition Language) to create a DB2 database on the mainframe to store the acquired data. Finally, we will run SQL queries against this database to look for interesting trends, patterns, and statistics. We will then use FTP to move those results off the mainframe and into a user-friendly "front-end" application.
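The DDL step can be illustrated with a minimal Python sketch. It assumes the downloaded .CSV has a header row and drafts a DB2 `CREATE TABLE` statement from the file's columns before the file is transferred. The helper names, the table name `MEDDB.HOSPITALS`, and the sample rows are all hypothetical. The type rules (INTEGER, BIGINT, DECIMAL, or VARCHAR sized to the longest value) are a simple heuristic, not the project's actual schema, and the sketch does not check DB2 identifier length limits, reserved words, or maximum DECIMAL precision.

```python
import csv
import io
import re

# Hypothetical helpers; the type rules below are a simple heuristic, not a real schema.
INT_RE = re.compile(r"-?\d+")
DEC_RE = re.compile(r"-?\d*\.\d+|-?\d+")


def sql_identifier(header: str) -> str:
    """Turn a CSV header into an uppercase name of letters, digits, and underscores."""
    ident = re.sub(r"[^A-Za-z0-9_]", "_", header.strip()).upper()
    if not ident or ident[0].isdigit():
        ident = "C_" + ident  # identifiers should not start with a digit
    return ident


def infer_type(values: list[str]) -> str:
    """Pick a DB2 column type wide enough for every non-empty value in a column."""
    vals = [v.strip() for v in values if v.strip()]
    if not vals:
        return "VARCHAR(1)"  # column is entirely empty; placeholder type
    if all(INT_RE.fullmatch(v) for v in vals):
        if all(-2**31 <= int(v) < 2**31 for v in vals):
            return "INTEGER"  # fits a 32-bit signed integer
        if all(-2**63 <= int(v) < 2**63 for v in vals):
            return "BIGINT"  # fits a 64-bit signed integer
    if all(DEC_RE.fullmatch(v) for v in vals):
        # Precision = widest integer part + widest fractional part
        int_digits = max(len(v.lstrip("-").split(".")[0]) for v in vals)
        scale = max(len(v.split(".")[1]) if "." in v else 0 for v in vals)
        return f"DECIMAL({max(int_digits + scale, 1)},{scale})"
    return f"VARCHAR({max(len(v) for v in vals)})"  # longest trimmed value


def create_table_ddl(table: str, csv_text: str) -> str:
    """Build a CREATE TABLE statement from CSV text whose first row holds column names."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]
    columns = []
    for i, name in enumerate(header):
        # Short rows are padded with empty strings for the missing fields
        values = [row[i] if i < len(row) else "" for row in data]
        columns.append(f"  {sql_identifier(name)} {infer_type(values)}")
    return f"CREATE TABLE {table} (\n" + ",\n".join(columns) + "\n);"


if __name__ == "__main__":
    # Made-up sample rows, for illustration only
    sample = (
        "Facility Name,State,Beds,Avg Cost\n"
        "General Hospital,NJ,250,10432.50\n"
        "County Clinic,NY,40,987.25\n"
    )
    print(create_table_ddl("MEDDB.HOSPITALS", sample))
    # CREATE TABLE MEDDB.HOSPITALS (
    #   FACILITY_NAME VARCHAR(16),
    #   STATE VARCHAR(2),
    #   BEDS INTEGER,
    #   AVG_COST DECIMAL(7,2)
    # );
```

Every type is derived from the sample rows, so a real load would likely widen the VARCHAR and DECIMAL sizes to leave room for future data. The logical model built in ERwin would be the natural place to settle the final column definitions.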

Meeting

Monday through Thursday, 10:00am-12:00pm and 1:00pm-3:00pm, in room S-243

Students

- Tyler Zamski — tzamski (at) me.bergen.edu

- Andres Gonzalez — agonzalez114138 (at) me.bergen.edu

- Michael Hernandez — mhernandez113775 (at) me.bergen.edu

- Caitlin Mooney — cmooney (at) me.bergen.edu

Mentor(s)

- Professor Alan S. Eliscu — aeliscu (at) bergen.edu